Adding Language Models to your technology suite transforms user interactions. Get seamless, intelligent responses in your applications instantly.

Integrating language models into software projects can greatly enhance their functionality and user experience. By using language models in development, you can improve natural language processing, automate certain tasks, and create AI-powered coding projects.

Integrating language models into your applications brings several benefits, including faster and more accurate handling of natural language requests, better contextual understanding, and increased efficiency.

Integrating through a language model API lets you add these capabilities to your coding projects without building a model yourself, which makes AI-powered features easier to create and implement. Used well, this can streamline processes, increase accuracy, and improve the overall user experience.

The Importance of Language Models in Software Development

Artificial intelligence (AI) is changing the game for software developers worldwide. With the rise of AI-powered coding projects, it’s more important than ever to integrate language models into software development to enhance the coding experience and improve functionality.

Language models are a type of AI model that can understand and generate human language, making them essential for natural language processing tasks. They help developers create more intelligent applications by enabling them to understand and process human language in a way that is both sophisticated and intuitive.

Integrating language models into software development can improve the accuracy of tasks such as speech recognition, language translation, and sentiment analysis. By using language models in development, developers can create more intelligent applications that provide a more natural and intuitive user experience.

Overall, language models are an essential tool for developers looking to create AI-powered applications that are both sophisticated and intuitive. By incorporating language models into their projects, developers can create more intelligent and user-friendly applications that will better serve their users.

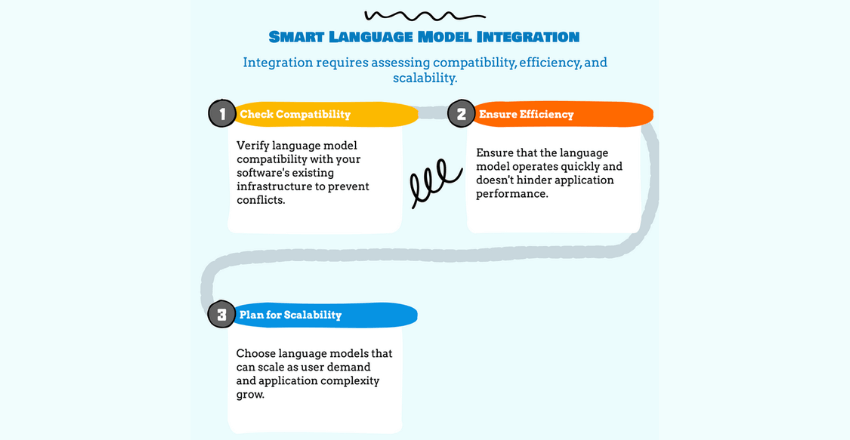

Considerations for Language Model Integration

Integrating language models into software projects can greatly enhance functionality and user experience. However, when incorporating language models into applications, there are several key considerations to keep in mind.

Compatibility

One of the most important factors to consider when integrating language models into your project is compatibility. Ensure that the language model API integration is feasible and compatible with your existing software infrastructure. Check the specifications and requirements of the language model and validate that they match with the existing infrastructure. This will help avoid conflicts and ensure smooth integration.
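One way to limit compatibility risk is to keep application code behind a small interface, so the model backend can be swapped without touching the rest of the system. The sketch below is only an illustration: `TextModel`, `EchoModel`, and `answer_user` are hypothetical names, and the echo backend stands in for whatever model or API your project actually uses.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: anything with a complete(prompt) -> str method."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend used only for illustration and local testing."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


def answer_user(model: TextModel, question: str) -> str:
    # Application code depends only on the interface, not on a vendor SDK.
    return model.complete(question.strip())


reply = answer_user(EchoModel(), "  What are your opening hours? ")
print(reply)
```

A real backend would implement the same `complete` method, so existing callers would not need to change.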

Efficiency

Another critical factor to consider is the efficiency of the language model. The language model integration should not slow down the application’s performance. The model should execute in a reasonable time and not be a burden to the system. Determine the appropriate resources required to run the language model and ensure that they are available and allocated optimally.
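A common way to reduce model load is to cache responses for repeated inputs. The following is a minimal sketch using Python's standard `functools.lru_cache`; `slow_model_call` is a hypothetical placeholder for a real model call, and caching like this only makes sense when the same prompt should always yield the same answer.

```python
from functools import lru_cache

calls = 0  # counts how often the (simulated) model is actually invoked


def slow_model_call(prompt: str) -> str:
    """Placeholder for a real, expensive model call (hypothetical)."""
    global calls
    calls += 1
    return prompt.upper()


@lru_cache(maxsize=1024)
def cached_completion(prompt: str) -> str:
    # Identical prompts are served from memory instead of re-running the model.
    return slow_model_call(prompt)


first = cached_completion("hello")
second = cached_completion("hello")
print(first, second, calls)
```

Here the second call is answered from the cache, so the simulated model runs only once.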

Scalability

Scalability is an important consideration when incorporating language models into applications. The language model should be scalable to meet increasing demands without significant changes to the existing infrastructure. This will help accommodate high-volume usage and future expansion. Consider the infrastructure requirements and functionality of the language model with respect to scalability.
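As one possible illustration of handling higher volume, requests can be fanned out over a bounded worker pool so that concurrency grows without overwhelming the model endpoint. This sketch uses the standard library's `ThreadPoolExecutor`; `fake_model_call` and `complete_many` are hypothetical names, and the fake call stands in for a network request to a model service.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_model_call(prompt: str) -> str:
    """Placeholder for a network call to a model endpoint (hypothetical)."""
    return f"response to {prompt!r}"


def complete_many(prompts: list[str], max_workers: int = 4) -> list[str]:
    # A bounded pool caps concurrent requests; map() preserves input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_model_call, prompts))


results = complete_many(["a", "b", "c"])
print(results)
```

Raising `max_workers` increases throughput only as far as the backing service allows, so the limit is worth tuning against real traffic.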

By keeping these key considerations in mind, you can effectively integrate language models with your software projects and enhance the application’s functionality and user experience.

Research and Industry Examples of Language Models in Action

Language models have been successfully integrated into a wide range of software projects, with research and industry examples showcasing their potential benefits. In a recent study, researchers at Google used language models to improve the accuracy of speech recognition systems, achieving a significant reduction in error rates.

Similarly, Apple’s Siri virtual assistant uses language models to understand user requests and provide relevant responses, while Amazon’s Alexa leverages language models to enable natural language processing and personalized recommendations. Language models have also been used in finance, with financial institutions employing them to analyze and predict customer behavior and market trends.

Looking to the future, there are numerous exciting trends and advancements in the field of language model implementation. One significant development is self-supervised pre-training, which lets language models learn from large collections of unlabeled text without relying on manual annotations.

Another promising trend is the integration of language models with other AI technologies, such as computer vision and robotics. This has the potential to unlock new levels of functionality and automation in software projects, making them more powerful and user-friendly than ever before.

Best Practices for Adding Language Models to Your Project

Integrating language models into software projects can greatly improve functionality and enhance user experience. However, it is important to follow best practices to ensure the successful implementation of these powerful tools.

Consider the following guidelines when adding language models to your coding projects:

- Choose the Right Framework: Select a language model framework that best fits the needs of your project. Consider factors such as compatibility, efficiency, and scalability. Frameworks such as TensorFlow and PyTorch are popular choices that offer extensive support and documentation.

- Understand the Model: Before incorporating a language model into your project, make sure to have a clear understanding of how it works and what it can do. This will help you identify the best use cases and optimize its functionality.

- Plan for Data Storage: Model weights, along with any training or evaluation data, can take up significant storage space. Plan ahead for storage and ensure that your project has enough capacity to handle the added load.

- Test and Validate: Thoroughly test and validate the language model integration to ensure that it is performing as intended. This will help you identify and address any performance or functionality issues before they become a problem.

- Refine Your Approach: Language models can be powerful tools, but it takes time and effort to refine their use in your project. Continuously evaluate performance, user experience, and functionality to identify areas for improvement and adjust your approach accordingly.

Implementing these best practices can help ensure a successful integration of language models into your coding project, resulting in improved functionality and enhanced user experience.
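The "Test and Validate" guideline can be sketched as a small regression check that runs a fixed set of labeled inputs through the model and reports the ones that fail. Everything here is illustrative: `run_regression` and `keyword_intent` are hypothetical names, and the keyword rule stands in for a real model's prediction function.

```python
from typing import Callable


def run_regression(predict: Callable[[str], str], cases: dict[str, str]) -> list[str]:
    """Return the inputs whose prediction differs from the expected label."""
    return [text for text, expected in cases.items() if predict(text) != expected]


def keyword_intent(text: str) -> str:
    # Hypothetical stand-in for a real model's predict function.
    return "OrderIntent" if "order" in text.lower() else "OtherIntent"


cases = {
    "I want to order a pizza": "OrderIntent",
    "What time do you close?": "OtherIntent",
    "Cancel my booking": "CancelIntent",
}
failures = run_regression(keyword_intent, cases)
print(failures)
```

Re-running the same cases after each model or prompt change makes regressions visible before users see them.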

Challenges and Solutions in Language Model Integration

Integrating language models into software projects can present a variety of challenges, from compatibility issues with existing systems to scalability concerns. Here are some of the most common challenges encountered when incorporating language models into applications:

| Challenge | Solution |
|---|---|
| Compatibility with existing systems | Perform a thorough analysis of existing systems and ensure that the chosen language model framework is compatible with all components. |
| Efficient implementation | Optimize language model implementation by utilizing efficient algorithms and minimizing resource usage. |
| Scalability | Plan for future growth and ensure that the language model implementation can handle increasing amounts of data and user traffic. |

One additional challenge is ensuring that the language model is trained on relevant data that accurately represents the target user base. This can require significant resources and expertise.

However, there are several potential solutions to these challenges. For compatibility issues, consider using middleware or APIs to bridge the gap between systems. Efficient implementation can be achieved through careful algorithm selection and optimization. Scalability can be addressed through the use of cloud-based resources and distributed computing. Lastly, ensuring accurate data for language model training can be achieved through partnerships with relevant industry experts and data providers.

By understanding these challenges and potential solutions, developers can better integrate language models into their applications and achieve optimal functionality and performance.

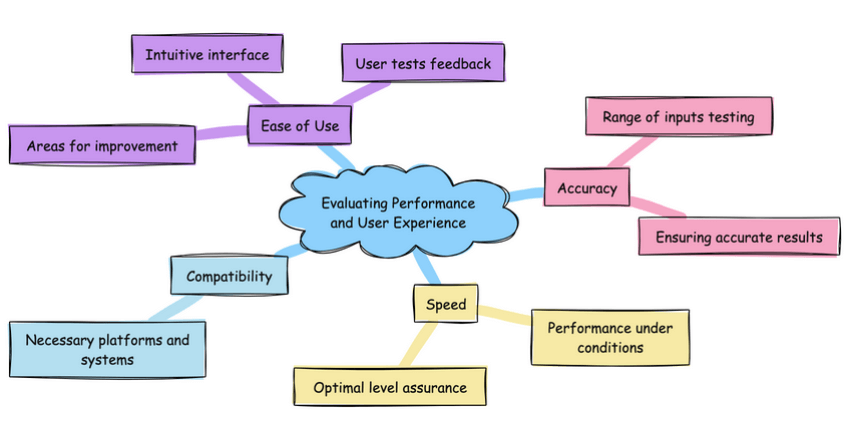

Evaluating Performance and User Experience

After integrating language models into your software project, it is crucial to evaluate their performance and impact on user experience. Here are some key factors to consider:

- Ease of Use: Your language model should be intuitive and easy to use. Consider conducting user tests to gather feedback on usability and identify any areas for improvement.

- Accuracy: Assess the accuracy of your language model by testing it with a range of inputs. This will help ensure that users receive the most accurate results.

- Speed: Speed is an important factor in the user experience. Test the speed of your language model under various conditions to ensure it is performing at an optimal level.

- Compatibility: Make sure that your language model is compatible with all necessary platforms and systems.

Example Code: Evaluating Language Model Performance

Below is an example of how you can evaluate the performance of your language model using Python:

    # Import necessary libraries
    import time

    import sklearn.metrics as metrics

    # `language_model` is assumed to be your already-loaded model object,
    # exposing a predict(text) method that returns an intent label.

    # Define input and expected output
    input_text = "I want to order a pizza"
    expected_output = "OrderConfirmationIntent"

    # Time the prediction
    start_time = time.perf_counter()
    predicted_output = language_model.predict(input_text)
    prediction_time = time.perf_counter() - start_time

    # Evaluate accuracy (accuracy_score expects sequences of labels, not single strings)
    accuracy = metrics.accuracy_score([expected_output], [predicted_output])

    # Report speed and accuracy
    print("Prediction time:", prediction_time)
    print("Accuracy:", accuracy)

In this example, the model's prediction is timed and its output is scored against the expected label. With a single input the accuracy is either 0 or 1, so add more labeled examples to both lists to get a meaningful score. This provides useful feedback for evaluation and optimization.
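To go beyond a single input, the same idea can be applied to a small labeled set, collecting accuracy and latency statistics together. This is a rough sketch using only the standard library; `measure` and `toy_predict` are hypothetical names, and the toy predictor stands in for a real model so the example runs on its own.

```python
import statistics
import time
from typing import Callable


def measure(predict: Callable[[str], str], samples: list[tuple[str, str]]) -> dict[str, float]:
    """Return accuracy and latency statistics over (input, expected) pairs."""
    latencies, correct = [], 0
    for text, expected in samples:
        start = time.perf_counter()
        predicted = predict(text)
        latencies.append(time.perf_counter() - start)
        correct += predicted == expected
    return {
        "accuracy": correct / len(samples),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }


def toy_predict(text: str) -> str:
    # Hypothetical stand-in for language_model.predict.
    return "OrderIntent" if "order" in text.lower() else "OtherIntent"


samples = [
    ("I want to order a pizza", "OrderIntent"),
    ("Cancel my booking", "CancelIntent"),
]
report = measure(toy_predict, samples)
print(report)
```

Tracking the slowest call alongside the median helps surface occasional slow responses that an average would hide.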

Final Thoughts

Incorporating language models into your software projects can have a major impact on functionality and user experience. The future of language model integration looks promising, with ongoing advancements and innovations on the horizon.

External Resources

https://en.wikipedia.org/wiki/Language_model

FAQ

FAQ 1: How do I integrate a basic language model into my website for content generation?

Answer: Adding a language model for content generation on your website can significantly enhance user engagement by providing dynamic and relevant content. A simple way to start is by using the GPT-2 model from the transformers library by Hugging Face.

Here’s a Python example for generating content:

    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    def generate_content(prompt, length=100):
        # Load the pretrained GPT-2 tokenizer and model
        tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
        model = GPT2LMHeadModel.from_pretrained('gpt2')
        # Encode the prompt and generate a continuation
        inputs = tokenizer.encode(prompt, return_tensors='pt')
        outputs = model.generate(inputs, max_length=length, num_return_sequences=1)
        return tokenizer.decode(outputs[0], skip_special_tokens=True)

    # Example usage
    print(generate_content("The future of technology is", 50))

This code loads the GPT-2 model and tokenizer, then generates text based on a given prompt. The length parameter sets the maximum length of the result in tokens, including the prompt, so adjust it to control the output size. This example can be integrated into a backend server to dynamically generate content for your website; in that setting, load the tokenizer and model once at startup rather than on every call.
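As a rough sketch of the backend integration mentioned here, a generation function can be exposed through a minimal WSGI endpoint using only the standard library. The `app` function and its `prompt` and `length` query parameters are assumptions made for illustration, and `generate_content` is replaced by a placeholder so the example runs without downloading a model.

```python
import json
from urllib.parse import parse_qs


def generate_content(prompt: str, length: int = 100) -> str:
    """Placeholder: swap in the GPT-2 based function from the example above."""
    return (prompt + " ...")[:length]


def app(environ, start_response):
    """Minimal WSGI endpoint: GET /?prompt=...&length=... returns JSON."""
    query = parse_qs(environ.get("QUERY_STRING", ""))
    prompt = query.get("prompt", [""])[0]
    if not prompt:
        start_response("400 Bad Request", [("Content-Type", "application/json")])
        return [json.dumps({"error": "missing prompt"}).encode("utf-8")]
    length = int(query.get("length", ["100"])[0])
    body = json.dumps({"text": generate_content(prompt, length)}).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json")])
    return [body]
```

For local experiments, the app could be served with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. A production setup would add input validation, rate limiting, and a proper WSGI server.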

FAQ 2: How can I add a language model to my app for improved search functionality?

Answer: Enhancing search functionality in your app with a language model can provide users with more accurate and contextually relevant results. For instance, BERT (Bidirectional Encoder Representations from Transformers) can be used to understand the intent behind search queries. While integrating BERT directly into an app might be complex due to its size, you can use a distilled version or API services that offer BERT capabilities.

Here’s an example using the transformers library:

    from transformers import BertForQuestionAnswering, BertTokenizer
    import torch

    def search_documents(question, document):
        tokenizer = BertTokenizer.from_pretrained('bert-large-uncased-whole-word-masking-finetuned-squad')
        model = BertForQuestionAnswering.from_pretrained('bert-large-uncased-whole-word-masking-finetuned-squad')
        # Encode question and document as one sequence: [CLS] question [SEP] document [SEP]
        input_ids = tokenizer.encode(question, document)
        tokens = tokenizer.convert_ids_to_tokens(input_ids)
        # Search the input IDs for the first instance of the `[SEP]` token.
        sep_index = input_ids.index(tokenizer.sep_token_id)
        # Segment A is the question (including the first [SEP]); segment B is the document.
        num_seg_a = sep_index + 1
        num_seg_b = len(input_ids) - num_seg_a
        segment_ids = [0]*num_seg_a + [1]*num_seg_b
        assert len(segment_ids) == len(input_ids)
        outputs = model(torch.tensor([input_ids]), token_type_ids=torch.tensor([segment_ids]))
        # Pick the most likely start and end token positions of the answer
        answer_start = torch.argmax(outputs.start_logits)
        answer_end = torch.argmax(outputs.end_logits) + 1
        return ' '.join(tokens[answer_start:answer_end])

    # Example usage
    question = "What is AI?"
    document = "AI, or Artificial Intelligence, is a branch of computer science that aims to create intelligent machines."
    print(search_documents(question, document))

This code snippet demonstrates how to use BERT for a question-answering system, which can be adapted for complex search functionality in apps. It takes a question and a document (the content to search through) and returns the part of the document that answers the question.
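One detail worth noting: BERT's WordPiece tokenizer splits rare words into pieces, marking continuation pieces with a `##` prefix, so joining tokens with spaces can leave fragments in the returned answer. The helper below is a small sketch of how such pieces might be merged back into words; `merge_wordpieces` is a hypothetical name and the token list is made up for illustration.

```python
def merge_wordpieces(tokens: list[str]) -> str:
    """Join WordPiece tokens, gluing '##' continuation pieces to the previous word."""
    words: list[str] = []
    for tok in tokens:
        if tok.startswith("##") and words:
            words[-1] += tok[2:]  # strip the marker and append to the previous word
        else:
            words.append(tok)
    return " ".join(words)


# Illustrative token list, not actual model output.
answer = merge_wordpieces(["a", "branch", "of", "comp", "##uter", "science"])
print(answer)
```

Applying a helper like this to the selected token span, instead of a plain `' '.join(...)`, would give cleaner answers for words the tokenizer has split.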

FAQ 3: How do I use language models for sentiment analysis in customer feedback?

Answer: Sentiment analysis is a valuable tool for understanding customer feedback, and language models like BERT can be highly effective for this task. Here’s how you can implement sentiment analysis using the transformers library:

    from transformers import pipeline

    def analyze_sentiment(text):
        # Create a sentiment classifier backed by the library's default pretrained model
        classifier = pipeline('sentiment-analysis')
        return classifier(text)

    # Example usage
    feedback = "I love this product! It has changed my life for the better."
    print(analyze_sentiment(feedback))

This example uses the pipeline function from the transformers library to create a sentiment analysis classifier. It's a quick and efficient way to implement sentiment analysis, requiring only a few lines of code. The classifier returns a list of results, each with a label (positive or negative) and a confidence score for the provided text, which can be integrated into your app to automatically analyze customer feedback.
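Once many feedback items have been classified, the per-item results can be rolled up into an overall picture. The sketch below assumes results shaped like the pipeline's output (dictionaries with `label` and `score` keys); `summarize` is a hypothetical helper and the sample results are hand-written for illustration, not real model output.

```python
from collections import Counter


def summarize(results: list[dict]) -> dict[str, float]:
    """Return the share of each label among classifier results."""
    counts = Counter(r["label"] for r in results)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


# Hand-written sample results for illustration only.
sample_results = [
    {"label": "POSITIVE", "score": 0.99},
    {"label": "NEGATIVE", "score": 0.97},
    {"label": "POSITIVE", "score": 0.88},
    {"label": "POSITIVE", "score": 0.91},
]
summary = summarize(sample_results)
print(summary)
```

A fuller version might also filter out low-confidence results by `score` before counting.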

Jane Watson is a seasoned expert in AI development and a prominent author for the “Hire AI Developer” blog. With over a decade of experience in the field, Jane has established herself as a leading authority in AI app and website development, as well as AI backend integrations. Her expertise extends to managing dedicated development teams, including AI developers, Machine Learning (ML) specialists, and other supporting roles such as QA and product managers. Jane’s primary focus is on providing professional and experienced English-speaking AI developers to companies in the USA, Canada, and the UK.

Jane’s journey with AI began during her time at Duke University, where she pursued her studies in computer science. Her passion for AI grew exponentially as she delved into the intricacies of the subject. Over the years, she honed her skills and gained invaluable experience working with renowned companies such as Activision and the NSA. These experiences allowed her to master the art of integrating existing systems with AI APIs, solidifying her reputation as a versatile and resourceful AI professional.

Currently residing in the vibrant city of Los Angeles, Jane finds solace in her role as an author and developer. Outside of her professional pursuits, she cherishes the time spent with her two daughters, exploring the beautiful hills surrounding the city. Jane’s dedication to the advancement of AI technology, combined with her wealth of knowledge and experience, makes her an invaluable asset to the “Hire AI Developer” team and a trusted resource for readers seeking insights into the world of AI.