AI Backend Integration Solutions redefine innovation. Empower your team, optimize processes, and drive growth through strategic AI implementation.

As businesses increasingly rely on artificial intelligence (AI) to optimize their operations, it’s critical to understand how AI can be integrated into backend systems to maximize its value. AI backend integration solutions offer a range of benefits, including process automation, data analysis, and intelligent decision-making.

The Role of AI in Backend Development

AI now plays a practical role in backend development. Backend systems can use it for work that ranges from automating routine tasks to performing complex data analysis.

With the right AI backend integration services, businesses can streamline their operations, improve their customer experiences, and make better decisions.

One of the main benefits of incorporating AI into backend systems is the ability to automate tasks that are repetitive or time-consuming. For instance, chatbots built on natural language processing (NLP) models can be integrated into a business’s backend system to handle customer queries in real time.

In the same vein, AI can help automate processes such as scheduling, billing, and data entry, freeing up staff to focus on more complex tasks.

Furthermore, incorporating AI into backend systems can enable businesses to perform complex data analysis with ease. AI algorithms can sift through vast amounts of data, making correlations and identifying patterns that humans may miss.

This can help businesses make data-driven decisions faster, improving their overall efficiency and performance.

The Benefits of AI Backend Integration Services

AI backend integration services offer businesses several benefits, including:

- Increased efficiency and productivity

- Improved customer experiences

- Better decision-making through data analysis

- Cost savings from automating routine tasks

Example 1: AI-Powered Chatbot Integration

This example uses Python with Flask to integrate a simple AI-powered chatbot into a backend system, leveraging the natural language processing (NLP) capabilities of the transformers library by Hugging Face.

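from flask import Flask, request, jsonify
from transformers import pipeline

app = Flask(__name__)

# Initialize the chatbot once at startup.
# Note: the "conversational" pipeline task is not available in recent
# transformers releases, so the text-generation task is used here instead.
chatbot = pipeline("text-generation", model="microsoft/DialoGPT-medium")

@app.route('/chatbot', methods=['POST'])
def chat_with_bot():
    payload = request.get_json(silent=True) or {}
    user_input = payload.get('message', '')
    if not user_input:
        return jsonify({'error': 'message is required'}), 400
    # DialoGPT expects each turn to end with the end-of-sequence token
    prompt = user_input + chatbot.tokenizer.eos_token
    result = chatbot(prompt, max_new_tokens=50, return_full_text=False)
    return jsonify({'response': result[0]['generated_text']})

if __name__ == '__main__':
    app.run(debug=True)  # debug mode is for local development only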

This code snippet creates a web service that exposes an AI chatbot. When a user sends a message to the /chatbot endpoint, the backend passes it to the DialoGPT model and returns the generated reply. Each request is handled independently, so the model sees no earlier turns unless the application stores and resends the conversation history. This setup can be used to handle customer queries, provide information, or assist with navigation on a website.

Example 2: Automated Data Analysis with Machine Learning

The following Python code demonstrates how AI can be used for automated data analysis in backend systems, utilizing the scikit-learn library to perform predictive analysis.

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# Load dataset

iris = load_iris()

X = iris.data

y = iris.target

# Split dataset into training and testing set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train the model

model = RandomForestClassifier(n_estimators=100)

model.fit(X_train, y_train)

# Make predictions

predictions = model.predict(X_test)

# Evaluate the model

print("Accuracy:", accuracy_score(y_test, predictions))

This example uses the Iris dataset to train a RandomForestClassifier, a versatile machine learning model capable of handling classification tasks. After training, the model predicts the class of Iris plants based on new data inputs. This approach can be adapted to analyze business data, enabling automated decision-making based on historical data trends.

Both examples illustrate the transformative potential of AI in backend development, from enhancing user engagement through intelligent interactions to leveraging machine learning for insightful data analysis. These integrations not only streamline operations but also open new avenues for innovation and efficiency in business processes.

By incorporating AI into their backend systems, businesses can unlock their full potential and gain a competitive advantage.

AI Backend Integration Services and Frameworks

AI backend integration can draw on several programming languages, such as Python and Java, and on frameworks such as TensorFlow. Each has its own features and capabilities, which makes it suitable for different applications.

For instance, TensorFlow is a popular open-source framework for machine learning that can be used to build neural networks and perform data analysis.

When choosing a framework or programming language for AI backend development, it’s essential to consider factors such as scalability, performance, and maintenance. Working with AI backend experts can help ensure that the chosen framework is well-suited to the business’s needs and will deliver maximum value.

Frameworks for AI Backend Integration

When it comes to AI backend integration, choosing the right framework is crucial. Frameworks serve as a foundation for building and deploying AI models in backend systems. They provide a set of predefined tools and libraries that can be used to develop, train, and test AI algorithms. There are several popular frameworks available for AI backend integration, each with its own unique features and capabilities.

TensorFlow

TensorFlow is a widely used and highly popular framework for AI backend integration. It is an open-source library developed by Google that provides a range of tools for building and deploying AI models. TensorFlow has APIs for several programming languages, including Python, C++, and Java. It also supports distributed computing, making it ideal for large-scale backend systems.

To demonstrate how TensorFlow can be used to create a neural network, let’s walk through a basic example. The code below builds a simple neural network that could serve as a foundation for more complex backend tasks, such as predictive analytics or automated decision-making.

TensorFlow’s flexible architecture allows for easy deployment of computation across a variety of platforms (CPUs, GPUs, TPUs), making it an ideal choice for backend development.

The following example builds a neural network model that is capable of classifying images from the MNIST dataset, a large database of handwritten digits that is commonly used for training various image processing systems.

import tensorflow as tf

from tensorflow.keras.datasets import mnist

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten

from tensorflow.keras.utils import to_categorical

# Load data

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# Normalize the images.

train_images = train_images / 255.0

test_images = test_images / 255.0

# Convert labels to one-hot encoding

train_labels = to_categorical(train_labels)

test_labels = to_categorical(test_labels)

# Build the model

model = Sequential([

Flatten(input_shape=(28, 28)), # Input layer, flattens the image to a vector for the dense layer

Dense(128, activation='relu'), # Hidden layer with 128 neurons and ReLU activation

Dense(10, activation='softmax') # Output layer with 10 neurons for each digit and softmax activation

])

# Compile the model

model.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy'])

# Train the model

model.fit(train_images, train_labels, epochs=5, validation_data=(test_images, test_labels))

# Evaluate the model

test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)

print('\nTest accuracy:', test_acc)

This code accomplishes the following:

- Load and Preprocess Data: It starts by loading the MNIST dataset and normalizing the images. Normalizing the image data involves scaling the pixel values to a range of 0 to 1 to make the neural network model train faster.

- Build the Neural Network Model: A Sequential model is created, consisting of a flattening layer to convert 2D images into 1D vectors, followed by two dense layers. The first dense layer is a hidden layer with 128 neurons and uses the ReLU activation function. The second dense layer is the output layer with 10 neurons corresponding to the 10 possible classes of digits (0 through 9), using the softmax activation function for multi-class classification.

- Compile the Model: The model is compiled with the Adam optimizer, the categorical crossentropy loss function (suitable for multi-class classification), and accuracy as the metric for evaluation.

- Train the Model: The model is trained using the training data with a specified number of epochs (iterations over the entire dataset).

- Evaluate the Model: Finally, the model’s performance is evaluated on the test dataset to see how well it generalizes to new, unseen data.
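To make the preprocessing step more concrete, here is a small sketch in plain Python of what pixel normalization and one-hot encoding do to the data. It is illustrative only: the helper names and the 2x2 sample image are made up, and in the TensorFlow example the same work is done by the division by 255.0 and by to_categorical.

```python
def normalize_pixels(image, max_value=255.0):
    """Scale every pixel of a 2D image (list of rows) into the range 0..1."""
    return [[pixel / max_value for pixel in row] for row in image]

def one_hot(label, num_classes=10):
    """Return a vector with 1.0 at the label's index and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} is outside 0..{num_classes - 1}")
    return [1.0 if index == label else 0.0 for index in range(num_classes)]

tiny_image = [[0, 51], [102, 255]]   # made-up 2x2 grayscale image
print(normalize_pixels(tiny_image))  # [[0.0, 0.2], [0.4, 1.0]]
print(one_hot(3))                    # 1.0 in position 3, 0.0 elsewhere
```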

This example provides a clear illustration of how TensorFlow can be leveraged to build and train a neural network, which is a crucial capability for many AI-driven backend development tasks.

PyTorch

PyTorch is another popular open-source framework for AI backend integration. It was originally developed by Facebook (now Meta) and is today governed by the PyTorch Foundation. It is known for its ease of use and supports dynamic computation graphs, which make it easier to debug and modify AI models during development.

It has APIs for Python and C++, making it a versatile choice for backend systems.

To illustrate how PyTorch can be used to create a convolutional neural network (CNN), let’s design a simple CNN model for classifying images from the CIFAR-10 dataset.

This dataset consists of 60000 32×32 color images in 10 classes, with 6000 images per class. PyTorch provides a comprehensive ecosystem for model development and training, including automatic differentiation to compute gradients—essential for backpropagation in neural networks.

Below is a basic example that outlines the definition and training of a CNN using PyTorch. The model includes convolutional layers, activation functions, pooling layers, and fully connected layers to classify the images into one of the ten categories.

import torch
import torchvision
import torchvision.transforms as transforms
import torch.nn as nn
import torch.optim as optim

# Transform the data to torch tensors and normalize it
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# Load CIFAR-10 data
trainset = torchvision.datasets.CIFAR10(root='./data', train=True,
                                        download=True, transform=transform)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,
                                          shuffle=True)
testset = torchvision.datasets.CIFAR10(root='./data', train=False,
                                       download=True, transform=transform)
testloader = torch.utils.data.DataLoader(testset, batch_size=4,
                                         shuffle=False)

# Define the CNN structure
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)  # Input channels = 3, output channels = 6, kernel size = 5
        self.pool = nn.MaxPool2d(2, 2)  # Pooling with window of 2x2
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)  # Fully connected layer, input size = 16*5*5, output size = 120
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)  # Output layer, 10 classes

    def forward(self, x):
        x = self.pool(nn.functional.relu(self.conv1(x)))
        x = self.pool(nn.functional.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)  # Flatten the tensor for the fully connected layer
        x = nn.functional.relu(self.fc1(x))
        x = nn.functional.relu(self.fc2(x))
        x = self.fc3(x)
        return x

net = Net()

# Define a Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

# Train the network
for epoch in range(2):  # loop over the dataset multiple times
    running_loss = 0.0
    for i, data in enumerate(trainloader, 0):
        # get the inputs; data is a list of [inputs, labels]
        inputs, labels = data

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # print statistics
        running_loss += loss.item()
        if i % 2000 == 1999:  # print every 2000 mini-batches
            print(f'[{epoch + 1}, {i + 1:5d}] loss: {running_loss / 2000:.3f}')
            running_loss = 0.0

print('Finished Training')

# Save the trained model
PATH = './cifar_net.pth'
torch.save(net.state_dict(), PATH)

This PyTorch script does the following:

- Data Preparation: It starts by loading and normalizing the CIFAR-10 dataset using torchvision.

- CNN Architecture: Defines a Net class as the CNN model, which includes two convolutional layers (conv1 and conv2), one pooling layer (pool), and three fully connected layers (fc1, fc2, and fc3). The forward method defines the forward pass of the network.

- Training: Initializes a cross-entropy loss function and an SGD optimizer. It then trains the network for 2 epochs, using a loop to iterate over the training data, calculate the loss, perform backpropagation, and update the model parameters.

- Model Saving: After training, the model’s parameters are saved to a file, allowing for later use in prediction or further training.
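One detail that often puzzles readers is where the 16 * 5 * 5 input size of fc1 comes from. The short sketch below works through the arithmetic with the standard output-size formula for convolution and pooling windows (no padding, as in the layers above). The helper name `conv_output_size` is introduced here for illustration.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution or pooling window (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 32                                   # CIFAR-10 images are 32x32
size = conv_output_size(size, kernel=5)     # conv1: 32 -> 28
size = conv_output_size(size, 2, stride=2)  # pool:  28 -> 14
size = conv_output_size(size, kernel=5)     # conv2: 14 -> 10
size = conv_output_size(size, 2, stride=2)  # pool:  10 -> 5

channels = 16                               # output channels of conv2
flattened = channels * size * size
print(size, flattened)  # 5 400
```

If the kernel sizes, strides, or input resolution change, the same calculation gives the new input size for the first fully connected layer.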

This example showcases how PyTorch’s flexibility and powerful libraries facilitate the development and training of sophisticated CNN models for complex image classification tasks, making it a valuable tool for backend AI development.

Keras

Keras is a high-level deep learning API written in Python. It ships with TensorFlow as tf.keras, and Keras 3 can also run on JAX and PyTorch backends. An R interface is available as well. Keras provides a simple and intuitive API for building and deploying AI models, which makes it well suited to rapid prototyping and experimentation in backend systems.

To illustrate how Keras can be used to create a deep neural network (DNN), we’ll construct a model designed for a generic classification task. Keras, available within TensorFlow as tf.keras, simplifies the process of building and training deep learning models with its high-level, user-friendly API.

This example will focus on creating a DNN to classify instances into one of several possible categories based on their features. It’s a straightforward example, assuming you have a dataset split into features (X) and labels (y), where y are encoded as one-hot vectors for multi-class classification.

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import Adam

# Assuming X_train, y_train, X_test, y_test are preloaded datasets

# Define the model

model = Sequential()

model.add(Dense(512, activation='relu', input_shape=(X_train.shape[1],)))

model.add(Dense(256, activation='relu'))

model.add(Dense(128, activation='relu'))

model.add(Dense(y_train.shape[1], activation='softmax')) # Output layer size should match the number of classes

# Compile the model

model.compile(optimizer=Adam(),

loss='categorical_crossentropy',

metrics=['accuracy'])

# Train the model

history = model.fit(X_train, y_train, epochs=10, validation_data=(X_test, y_test))

# Evaluate the model

test_loss, test_accuracy = model.evaluate(X_test, y_test, verbose=2)

print(f'Test Accuracy: {test_accuracy*100:.2f}%')

Breakdown of the Code:

- Model Definition: We use the Sequential model, which is suitable for a stack of layers where each layer has exactly one input tensor and one output tensor. The model consists of four Dense layers, indicative of a fully connected neural network. The first layer specifies the input shape (input_shape) that matches the feature size of your dataset.

- Activation Functions: The ReLU (Rectified Linear Unit) activation function is used in the hidden layers to introduce non-linearity, helping the network learn complex patterns. The output layer uses the softmax activation, which is standard for multi-class classification problems, as it outputs a probability distribution over the classes.

- Compilation: The model is compiled with the Adam optimizer, a popular choice for deep learning tasks due to its efficiency. The loss function used is categorical_crossentropy, appropriate for multi-class classification where the labels are one-hot encoded. The metric ‘accuracy’ is specified to monitor the training and testing process.

- Training: The model is trained using the fit method, where we pass the training data (X_train, y_train), the number of epochs (iterations over the entire dataset), and the validation data. This process iterates over the dataset, making updates to the model weights to minimize the loss function.

- Evaluation: Finally, the model’s performance is evaluated on the test set to determine its accuracy.

This example demonstrates the simplicity of using Keras to build and train a deep neural network for classification tasks, providing a powerful tool for a wide range of applications in AI and machine learning.
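For readers who want to see what the softmax output layer and the categorical cross-entropy loss actually compute, here is a small plain-Python sketch. It is a simplified illustration with made-up scores, not how Keras implements these operations internally.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = [value - max(logits) for value in logits]  # avoids overflow in exp
    exps = [math.exp(value) for value in shifted]
    total = sum(exps)
    return [value / total for value in exps]

def categorical_crossentropy(one_hot_label, probabilities, eps=1e-12):
    """Negative log-probability assigned to the true class."""
    return -sum(t * math.log(p + eps) for t, p in zip(one_hot_label, probabilities))

probs = softmax([2.0, 1.0, 0.1])  # made-up scores for three classes
loss_right = categorical_crossentropy([1, 0, 0], probs)  # true class has the top score
loss_wrong = categorical_crossentropy([0, 0, 1], probs)  # true class has the lowest score
print([round(p, 3) for p in probs], round(loss_right, 3), round(loss_wrong, 3))
```

The loss is small when the model puts most of the probability on the true class and grows as that probability shrinks, which is the signal the optimizer uses during training.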

Challenges in AI Backend Integration

While the benefits of AI backend integration are clear, it is important to acknowledge and address the challenges that organizations may encounter during the process.

The following are some of the most significant challenges:

- Data compatibility: AI requires large amounts of high-quality data to train models effectively. However, legacy systems may not be designed to handle the data formats that AI requires, making data integration a significant challenge.

- Scalability: As AI models become more complex and require more data, backend systems must be able to scale to handle the increased demands. This can be particularly challenging for organizations with limited resources or those that rely on outdated systems.

- Security: Protecting sensitive data is a priority for all businesses, but incorporating AI into backend systems can create new security risks. Organizations must ensure that their AI backend integration solutions are secure and comply with relevant data protection regulations.
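The data compatibility challenge can be illustrated with a small sketch that converts records from a legacy system into the types a model pipeline expects, while collecting errors instead of failing on the first bad value. The schema, field names, and date format below are hypothetical.

```python
from datetime import datetime

# Hypothetical target schema: field name -> converter that raises on bad input
SCHEMA = {
    "customer_id": int,
    "amount": float,
    "created": lambda value: datetime.strptime(value, "%d/%m/%Y").date().isoformat(),
}

def coerce_record(raw):
    """Return (clean_record, errors) for one legacy record given as a dict of strings."""
    clean, errors = {}, []
    for field, convert in SCHEMA.items():
        try:
            clean[field] = convert(raw[field].strip())
        except KeyError:
            errors.append(f"{field}: missing")
        except (ValueError, AttributeError):
            errors.append(f"{field}: invalid value {raw[field]!r}")
    return clean, errors

good, good_errors = coerce_record(
    {"customer_id": " 42 ", "amount": "19.90", "created": "03/02/2024"})
bad, bad_errors = coerce_record({"customer_id": "n/a", "amount": "5"})
print(good, good_errors)
print(bad, bad_errors)
```

Returning the errors alongside the cleaned record lets the integration layer decide whether to reject, repair, or quarantine a record before it reaches model training or inference.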

Overcoming these challenges requires careful planning, collaboration, and expertise. It is important for organizations to work with AI backend experts who understand both the technical and business challenges of AI integration.

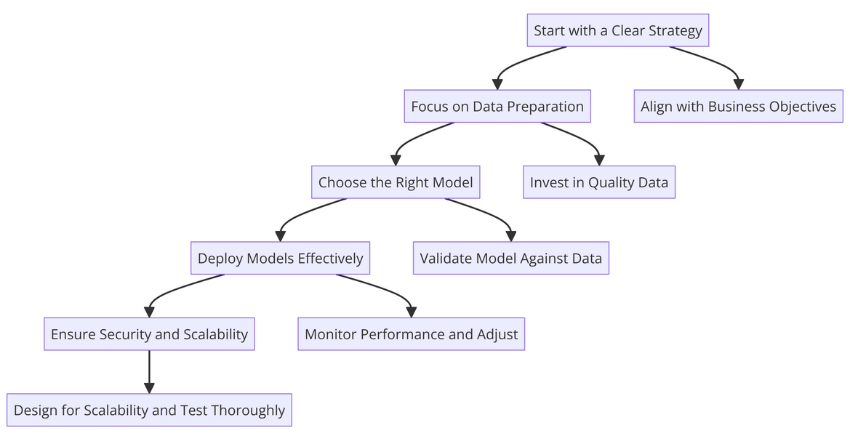

Best Practices for AI Backend Integration

Integrating AI into backend systems can be a complex and challenging process, but there are best practices that businesses can follow to ensure successful implementation. These best practices include:

- Start with a clear strategy: Before beginning your AI integration journey, it’s important to have a clear strategy in place that outlines your goals, objectives, and expected outcomes. This strategy should be aligned with your business objectives and take into account any challenges or limitations that may arise.

- Focus on data preparation: One of the most crucial steps in AI backend integration is data preparation. This involves collecting, cleaning, and organizing data in preparation for model training. High-quality data is essential for accurate model outputs, so it’s important to invest time and resources in this stage.

- Choose the right model: There are a variety of AI models available, each of which has its strengths and weaknesses. It’s important to choose the right model for your specific use case and to validate it against your data to ensure it’s the best fit for your needs.

- Deploy models effectively: Once you’ve chosen the right model, it’s important to deploy it effectively. This means ensuring it’s integrated seamlessly into your backend system and runs efficiently. It’s also important to monitor its performance and make adjustments as needed.

- Ensure security and scalability: As with any backend system, it’s important to ensure that your AI backend integration solution is secure, scalable, and can handle changing demands over time. This means putting in place proper security measures, designing for scalability, and testing thoroughly.

By following these best practices, businesses can ensure a smooth and effective AI backend integration process that delivers tangible benefits to their operations.

Use Cases of AI Backend Integration

AI backend integration has shown significant promise across various industries, helping businesses drive efficiency, enhance decision-making processes, and improve customer experiences. Below are some examples of how AI has been integrated into backend systems:

Finance Industry

The finance industry has been a significant adopter of AI backend integration to automate complex processes and enhance customer experiences. One example is fraud detection, where AI algorithms are used to analyze thousands of data points in real-time to detect and prevent fraudulent activities. AI is also used in predicting market trends, optimizing investment portfolios, and automating back-office operations, such as loan processing and claims management.

Retail Industry

Retail businesses are leveraging AI backend integration to optimize their supply chain by predicting demand and managing inventory more efficiently. AI algorithms are also used to enhance customer experiences, such as personalized recommendations based on browsing and purchase history, and chatbots to assist customers with inquiries and complaints.

Healthcare Industry

AI backend integration has the potential to reshape the healthcare industry, from improving patient outcomes to optimizing resource utilization. AI is used to support diagnosis, analyze medical images and data, and predict patient readmission rates. AI algorithms can also be utilized in drug discovery, clinical trial optimization, and resource management.

Manufacturing Industry

The manufacturing industry is another area where AI backend integration is being used to improve efficiency and optimize operations. AI algorithms are used to predict equipment failure, minimize downtime, and optimize production schedules. AI is also used in optimizing supply chain management, quality control, and inventory management.

These examples showcase the potential benefits of AI backend integration for businesses across various industries. By leveraging AI technologies, businesses can improve their operational efficiency, enhance customer experiences, and drive growth.

Choosing the Right AI Backend Integration Solution Provider

When it comes to AI backend integration, choosing the right solution provider is crucial. Your chosen provider will play a key role in the success of your integration project, so it’s important to take the time to evaluate potential partners carefully.

Here are some criteria to consider when selecting an AI backend integration solution provider:

- Expertise: Look for a provider with extensive experience in AI backend integration. They should have a deep understanding of the latest AI technologies and how to implement them effectively in backend systems.

- Experience: Consider the provider’s track record in delivering successful AI integration projects. Ask for case studies or customer references to get a sense of their previous work and how it aligns with your business needs.

- Communication: Strong communication and collaboration are critical to the success of an AI backend integration project. Ensure the provider has clear processes for communication and that they are transparent and responsive throughout the project.

- Scalability: As your business grows, so will your AI backend integration needs. Look for a provider who can scale their solutions and services to meet your evolving requirements.

- Flexibility: Every business has unique backend systems and processes. Look for a provider who can offer flexible solutions that can be customized to your specific needs.

Remember, choosing the right provider is only the first step. Effective collaboration and communication throughout the project will be essential to achieving a successful AI backend integration. Regular progress updates, testing, and continuous improvement will ensure that the project is on track and delivering the desired outcomes.

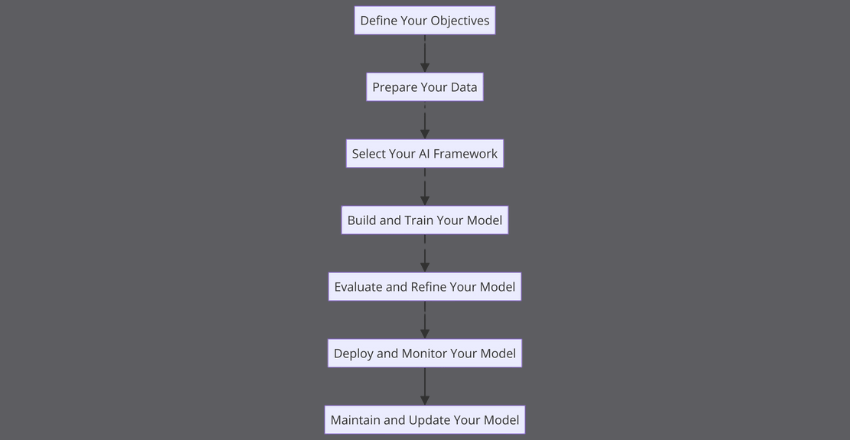

Implementing AI Backend Integration: Step-by-Step Guide

Integrating AI into backend systems can be a complex process that requires careful planning and execution. Here, we offer a step-by-step guide to help businesses successfully implement AI backend integration solutions.

Step 1: Define Your Objectives

The first step in implementing AI backend integration is to define your objectives. Determine what areas of your backend system could benefit from AI enhancements and what specific outcomes you hope to achieve. This will help guide your selection of frameworks and solution providers.

Step 2: Prepare Your Data

Preparing your data for AI integration is crucial for achieving accurate results. This involves cleaning and organizing your data, as well as formatting it in a way that is compatible with your chosen AI framework.
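As a rough illustration of this step, the sketch below parses a small CSV, drops rows with missing or non-numeric values, and rescales the numeric columns to the range 0 to 1. The column names and sample rows are made up, and real projects often use a library such as pandas for the same work.

```python
import csv
import io

RAW_CSV = """age,income,label
34,52000,1
,48000,0
29,61000,1
41,not available,0
"""  # made-up sample data

def load_clean_rows(text):
    """Parse CSV text and keep only rows whose numeric fields are all valid."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        try:
            rows.append((float(record["age"]), float(record["income"]), int(record["label"])))
        except ValueError:
            continue  # skip rows with missing or non-numeric values
    return rows

def min_max_scale(values):
    """Rescale a list of numbers to the range 0..1."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) if high > low else 0.0 for v in values]

rows = load_clean_rows(RAW_CSV)
ages = min_max_scale([row[0] for row in rows])
incomes = min_max_scale([row[1] for row in rows])
labels = [row[2] for row in rows]
print(len(rows), ages, incomes, labels)
```

Dropping incomplete rows is only one option. Depending on the data, filling in missing values or flagging them for review may be more appropriate.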

Step 3: Select Your AI Framework

Choose an AI framework that is well-suited to your objectives and data. Consider factors such as the framework’s capabilities, ease of use, and level of support.

Step 4: Build and Train Your Model

Use your chosen AI framework to build and train your model. This involves selecting the appropriate algorithms and feeding in your prepared data.

Step 5: Evaluate and Refine Your Model

Evaluate your model’s performance and refine it as needed. This involves testing the model with new data and making adjustments to improve accuracy and efficiency.
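Accuracy alone can hide problems, especially when one class is rare. The sketch below computes accuracy, precision, and recall for a binary classifier from lists of true and predicted labels. The function name and sample labels are illustrative, and libraries such as scikit-learn provide equivalent metrics.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # made-up ground truth
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]  # made-up model output
metrics = binary_metrics(y_true, y_pred)
print(metrics)  # {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75}
```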

Step 6: Deploy and Monitor Your Model

Deploy your model into your backend system and monitor its performance. This involves setting up automated processes to continually feed new data into the model and analyzing its output.
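One lightweight way to monitor a deployed model is to wrap each prediction call and keep a rolling window of latencies and outputs. The sketch below assumes a hypothetical `PredictionMonitor` class and a stand-in `fake_model` function. A production system would more likely export these numbers to a metrics service.

```python
import time
from collections import Counter, deque

class PredictionMonitor:
    """Keep a rolling window of latencies and predicted labels."""

    def __init__(self, window=100):
        self.latencies = deque(maxlen=window)
        self.labels = deque(maxlen=window)

    def track(self, predict_fn, features):
        """Call predict_fn(features), record latency and result, return the result."""
        start = time.perf_counter()
        label = predict_fn(features)
        self.latencies.append(time.perf_counter() - start)
        self.labels.append(label)
        return label

    def summary(self):
        """Summarize the current window: count, mean latency, share of each label."""
        count = len(self.labels)
        shares = {label: n / count for label, n in Counter(self.labels).items()} if count else {}
        average = sum(self.latencies) / count if count else 0.0
        return {"count": count, "avg_latency_s": average, "label_share": shares}

def fake_model(features):  # stand-in for a real model's predict call
    return "high" if sum(features) > 10 else "low"

monitor = PredictionMonitor(window=3)
for sample in ([1, 2], [8, 9], [20, 1], [0, 0]):
    monitor.track(fake_model, sample)
report = monitor.summary()
print(report)
```

A sudden change in the share of each predicted label, or a steady rise in latency, is often an early sign that the input data or the serving environment has changed.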

Step 7: Maintain and Update Your Model

Maintain and update your model to ensure ongoing optimal performance. This involves monitoring for changes in data patterns, making updates to algorithms or parameters as needed, and periodically retraining the model.
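A simple retraining trigger can compare recent input values with those seen at training time. The sketch below flags retraining when the recent mean of one feature has moved by more than a chosen number of baseline standard deviations. The threshold of 2.0 and the sample values are assumptions, and real drift detection usually looks at more than one statistic.

```python
from statistics import mean, stdev

def drift_score(baseline, recent):
    """How many baseline standard deviations the recent mean has moved."""
    spread = stdev(baseline)
    if spread == 0:
        return 0.0 if mean(recent) == mean(baseline) else float("inf")
    return abs(mean(recent) - mean(baseline)) / spread

def needs_retraining(baseline, recent, threshold=2.0):
    """Flag retraining when the shift exceeds the (assumed) threshold."""
    return drift_score(baseline, recent) > threshold

baseline_amounts = [20, 22, 19, 21, 20, 23, 18, 21]  # values seen at training time
stable_week = [21, 20, 22, 19]                       # recent data, similar to baseline
shifted_week = [35, 38, 33, 36]                      # recent data after a change

print(needs_retraining(baseline_amounts, stable_week))   # False
print(needs_retraining(baseline_amounts, shifted_week))  # True
```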

Successfully implementing AI backend integration solutions requires a careful and thorough approach. By following these steps and seeking the assistance of experienced AI backend integration solution providers, businesses can reap the many benefits that AI has to offer.

Final Thoughts

AI backend integration solutions have the potential to revolutionize business operations and unlock untapped potential. By integrating AI into backend systems, businesses can automate processes, analyze data, and make intelligent predictions. This translates into increased productivity, better decision-making, and enhanced customer experiences.

However, AI backend integration is not without its challenges. Issues related to data compatibility, scalability, and security must be addressed to ensure a successful integration. Therefore, adopting best practices and selecting the right solution provider is crucial.

Research and industry examples have shown how different businesses have reaped the benefits of AI backend integration. For instance, a healthcare provider used AI to improve patient care, while a finance company used it to enhance fraud detection. Such examples serve as a testament to the potential impact of AI backend integration.

External Resources

https://stackoverflow.blog/2020/10/12/how-to-put-machine-learning-models-into-production/

FAQ

FAQ 1: How do I integrate an AI model into my existing backend?

Answer:

Integrating an AI model into your backend involves a few key steps. First, ensure your backend can communicate with the AI model, typically via API calls. Here’s a basic example using Python Flask for a REST API endpoint that interacts with an AI model:

from flask import Flask, request, jsonify
import your_ai_model  # Import your AI model here

app = Flask(__name__)

@app.route('/predict', methods=['POST'])
def predict():
    data = request.get_json(silent=True) or {}
    if 'input' not in data:
        return jsonify({'error': 'missing "input" field'}), 400
    prediction = your_ai_model.predict(data['input'])
    # The prediction must be JSON-serializable (convert arrays to lists first)
    return jsonify({'prediction': prediction})

if __name__ == '__main__':
    app.run(debug=True)  # debug mode is for local development only

This code snippet creates a simple server that listens for POST requests on /predict. When it receives data, it uses the imported your_ai_model to make a prediction and returns the result.
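Before a request reaches the model, it usually helps to check that the payload has the shape the model expects. The sketch below shows one possible validator that could be called at the top of the predict function. The name `validate_input` and the assumption of four numeric features are illustrative.

```python
EXPECTED_FEATURES = 4  # assumed number of inputs the model was trained on

def validate_input(payload):
    """Return (features, error); exactly one of the two is None."""
    if not isinstance(payload, dict) or "input" not in payload:
        return None, 'request body must be a JSON object with an "input" field'
    values = payload["input"]
    if not isinstance(values, list) or len(values) != EXPECTED_FEATURES:
        return None, f'"input" must be a list of {EXPECTED_FEATURES} numbers'
    # bool is a subclass of int in Python, so exclude it explicitly
    if not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        return None, '"input" must contain only numbers'
    return [float(v) for v in values], None

features, error = validate_input({"input": [5.1, 3.5, 1.4, 0.2]})
print(features, error)
print(validate_input({"input": [1, 2]}))
```

When the error is not None, the endpoint can return it with a 400 status code instead of passing malformed data to the model.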

FAQ 2: How can I ensure my AI backend scales with user demand?

Answer:

Scaling your AI backend to handle varying loads involves containerization and orchestration tools like Docker and Kubernetes. Here’s a basic Dockerfile example for containerizing your AI backend application:

# Use an official Python runtime as a parent image
FROM python:3.8-slim

# Set the working directory in the container
WORKDIR /app

# Copy the current directory contents into the container at /app
COPY . /app

# Install any needed packages specified in requirements.txt
RUN pip install --no-cache-dir -r requirements.txt

# Document the port the app listens on (Flask's development server defaults to 5000)
EXPOSE 5000

# Run app.py when the container launches
CMD ["python", "app.py"]

This Dockerfile creates a Docker image that contains your AI backend, ready to be deployed and scaled with Kubernetes. For the service to be reachable from outside the container, app.py must bind to 0.0.0.0 rather than the default 127.0.0.1, and the exposed port should match the port the app listens on. The image ensures that your application can be replicated and managed consistently across different environments as load increases.

FAQ 3: How do I secure communications between my frontend and AI backend?

Answer:

Securing communication involves implementing HTTPS for your API endpoints and using authentication tokens. Here’s how you could modify a Flask app to require a token:

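import hmac
import os
from flask import Flask, request, jsonify, abort
from functools import wraps

app = Flask(__name__)

# Read the expected token from the environment instead of hard-coding it
# (API_TOKEN is an assumed variable name; the default is a placeholder)
API_TOKEN = os.environ.get('API_TOKEN', 'YourSecretToken')

def require_api_token(func):
    @wraps(func)
    def check_token(*args, **kwargs):
        supplied = request.headers.get('API-Token', '')
        # compare_digest avoids leaking information through timing differences
        if not hmac.compare_digest(supplied.encode(), API_TOKEN.encode()):
            abort(401)  # Unauthorized
        return func(*args, **kwargs)
    return check_token

@app.route('/secure-predict', methods=['POST'])
@require_api_token
def secure_predict():
    data = request.json
    prediction = 'Your AI Model Prediction Logic Here'
    return jsonify({'secure_prediction': prediction})

if __name__ == '__main__':
    app.run(ssl_context='adhoc')

This example adds a decorator that checks for a specific ‘API-Token’ in the request headers. It ensures that only requests with the correct token can access the /secure-predict endpoint, providing a layer of security.

Additionally, running the app with ssl_context='adhoc' enables HTTPS with a temporary self-signed certificate, which requires the cryptography package and is suitable for local testing only. In production, use a certificate issued by a trusted authority, often terminated at a reverse proxy or load balancer, so that data in transit is encrypted and clients can verify the server.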
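A single static token is easy to set up but hard to rotate. One common alternative is a signed token that carries an expiry time. The sketch below uses only the Python standard library to issue and verify such tokens with HMAC-SHA256. The token layout, function names, and secret are illustrative assumptions, and established formats such as JWT cover the same ground in more depth.

```python
import hashlib
import hmac
import time

def issue_token(user_id, secret, ttl_seconds=3600, now=None):
    """Return 'user_id:expiry:signature' signed with HMAC-SHA256."""
    expiry = int((now if now is not None else time.time()) + ttl_seconds)
    message = f"{user_id}:{expiry}"
    signature = hmac.new(secret, message.encode(), hashlib.sha256).hexdigest()
    return f"{message}:{signature}"

def verify_token(token, secret, now=None):
    """Return the user id if the signature matches and the token is unexpired, else None."""
    try:
        user_id, expiry, signature = token.rsplit(":", 2)
        expires_at = int(expiry)
    except ValueError:
        return None  # wrong number of parts or non-numeric expiry
    expected = hmac.new(secret, f"{user_id}:{expiry}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = now if now is not None else time.time()
    return user_id if current < expires_at else None

SECRET = b"demo-secret-change-me"  # placeholder; load real secrets from configuration
token = issue_token("alice", SECRET, ttl_seconds=60, now=1_000)
print(verify_token(token, SECRET, now=1_030))  # alice
print(verify_token(token, SECRET, now=1_061))  # None (expired)
```

The optional now parameter exists only to make the example deterministic. In normal use both functions fall back to the current time.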

Jane Watson is a seasoned expert in AI development and a prominent author for the “Hire AI Developer” blog. With over a decade of experience in the field, Jane has established herself as a leading authority in AI app and website development, as well as AI backend integrations. Her expertise extends to managing dedicated development teams, including AI developers, Machine Learning (ML) specialists, and other supporting roles such as QA and product managers. Jane’s primary focus is on providing professional and experienced English-speaking AI developers to companies in the USA, Canada, and the UK.

Jane’s journey with AI began during her time at Duke University, where she pursued her studies in computer science. Her passion for AI grew exponentially as she delved into the intricacies of the subject. Over the years, she honed her skills and gained invaluable experience working with renowned companies such as Activision and the NSA. These experiences allowed her to master the art of integrating existing systems with AI APIs, solidifying her reputation as a versatile and resourceful AI professional.

Currently residing in the vibrant city of Los Angeles, Jane finds solace in her role as an author and developer. Outside of her professional pursuits, she cherishes the time spent with her two daughters, exploring the beautiful hills surrounding the city. Jane’s dedication to the advancement of AI technology, combined with her wealth of knowledge and experience, makes her an invaluable asset to the “Hire AI Developer” team and a trusted resource for readers seeking insights into the world of AI.